Numerous researchers have used computer programs to scrutinize large volumes of text, searching for patterns or tendencies that might otherwise escape detection. One such program is Linguistic Inquiry and Word Count (LIWC), software employed by scholars in diverse fields, including law.[1] Among the studies using it were a pair of SCOWstats posts in the spring of 2019,[2] reporting on LIWC’s analysis of Wisconsin Supreme Court opinions issued from the 2015-16 through the 2017-18 terms. Three terms have passed since then, and with new justices joining the court,[3] it’s time for another look at their labors through the lens of LIWC.[4]

We’ll confine our attention to the most interesting of the diagnostic categories from the 2019 posts, starting with two of LIWC’s “summary variables”—“Analytical Thinking” and “Clout.” The “scores” for these variables are derived from proprietary algorithms described by LIWC’s creators as follows:

Analytical thinking: “A high number reflects formal, logical, and hierarchical thinking; lower numbers reflect more informal, personal, here-and-now, and narrative thinking.”

Clout: “A high number suggests that the author is speaking from the perspective of high expertise and is confident; low Clout numbers suggest a more tentative, humble, even anxious style.”[5]

Opinions will vary regarding the importance of high or low scores in these categories, but that should not diminish interest in the following results. In some instances they point to notable differences among the justices and should serve as food for discussion, or at least contemplation.

The raw scores returned by LIWC give a sense of the differences among justices, but we can better illustrate the variation by converting them to standard deviations. Briefly put, for a collection of numbers one can compute the mean (or average) and the standard deviation, which measures how widely the numbers are spread around the mean. This is useful because, when the numbers follow a roughly bell-shaped (normal) distribution, approximately 68% of them fall within one standard deviation above or below the mean, and roughly 95% fall within two standard deviations. With a group as small as a court’s roster, these percentages are only rough guides. As an example, suppose that a group of the justices’ scores has a mean of 100 and a standard deviation of 10. We would then expect about 68% of the scores to fall between 90 and 110, and about 95% to fall within two standard deviations of the mean, between 80 and 120. Thus, if a justice’s score is two standard deviations above (or below) the mean, we understand that it is a good deal higher (or lower) than most of the other justices’ scores. If a justice’s score is much less than one standard deviation above (or below) the mean, it is close to the average score for the court as a whole.
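The conversion described above is the familiar z-score: subtract the group mean from each score and divide by the standard deviation. The Python sketch below shows that arithmetic on hypothetical scores chosen to have a mean of 100 and a standard deviation of 10, matching the example in the text. The function name `z_scores` and the "Justice A" through "Justice E" labels are illustrative assumptions, and the post does not say whether a population or a sample standard deviation was used; this sketch assumes the population version.

```python
from statistics import mean, pstdev


def z_scores(scores: dict[str, float]) -> dict[str, float]:
    """Express each raw score as standard deviations above (+) or below (-) the group mean."""
    values = list(scores.values())
    mu = mean(values)
    # Assumption: population standard deviation. statistics.stdev (sample SD)
    # would be an equally defensible choice; the post does not say which was used.
    sigma = pstdev(values)
    if sigma == 0:
        return {name: 0.0 for name in scores}  # every score sits exactly at the mean
    return {name: round((x - mu) / sigma, 1) for name, x in scores.items()}


# Hypothetical raw scores (not LIWC output) with mean 100 and standard deviation 10.
example = {"Justice A": 85, "Justice B": 95, "Justice C": 100, "Justice D": 105, "Justice E": 115}
print(z_scores(example))
# {'Justice A': -1.5, 'Justice B': -0.5, 'Justice C': 0.0, 'Justice D': 0.5, 'Justice E': 1.5}
```

A score of 115 becomes 1.5, that is, 1½ standard deviations above the mean, which is the form in which the tables below report each justice’s results.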

Majority opinions

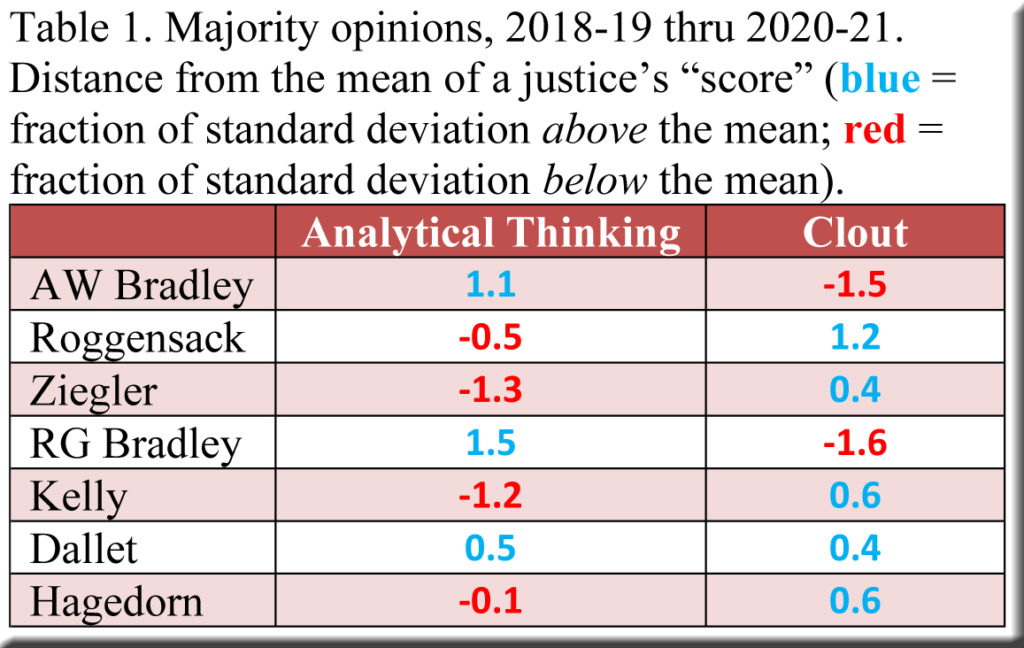

Armed with this information, let’s proceed to Table 1, which shows the justices’ LIWC scores in the “Analytical Thinking” and “Clout” categories for majority (or lead) opinions, presented in terms of standard deviations above or below the group mean.[6] For instance, Justice Ann Walsh Bradley’s score of 1.1 in “Analytical Thinking” is just over 1 standard deviation above the mean, while her score of -1.5 in “Clout” is 1½ standard deviations below the mean. In other words, her “Analytical Thinking” score sits above those of most of her colleagues, while her “Clout” score is well below most of theirs. For a different result, consider Justice Hagedorn’s score of -0.1 in “Analytical Thinking”—almost exactly at the mean. As for the largest gap between the two scores, the distinction belongs to Justice Rebecca Bradley—1½ standard deviations above the mean in “Analytical Thinking” and 1.6 standard deviations below the mean in “Clout.”
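The "largest gap" comparison is simple arithmetic: the absolute difference between a justice’s two standardized scores. The sketch below uses only the two complete pairs of scores quoted in the preceding paragraph (the remaining rows appear in Table 1 and are not reproduced here), so it illustrates the calculation rather than reproducing the full table. The function name `largest_gap` is hypothetical.

```python
def largest_gap(table: dict[str, tuple[float, float]]) -> tuple[str, float]:
    """Return the justice whose two z-scores lie farthest apart, and that distance.

    Each value in `table` is a pair: (analytical_thinking_z, clout_z).
    """
    gaps = {name: abs(analytic - clout) for name, (analytic, clout) in table.items()}
    leader = max(gaps, key=gaps.get)
    return leader, round(gaps[leader], 1)


# Only the pairs quoted in the text; the other rows of Table 1 would be added here.
majority_z = {
    "Ann Walsh Bradley": (1.1, -1.5),   # gap of 2.6
    "Rebecca Bradley": (1.5, -1.6),     # gap of 3.1
}
print(largest_gap(majority_z))  # ('Rebecca Bradley', 3.1)
```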

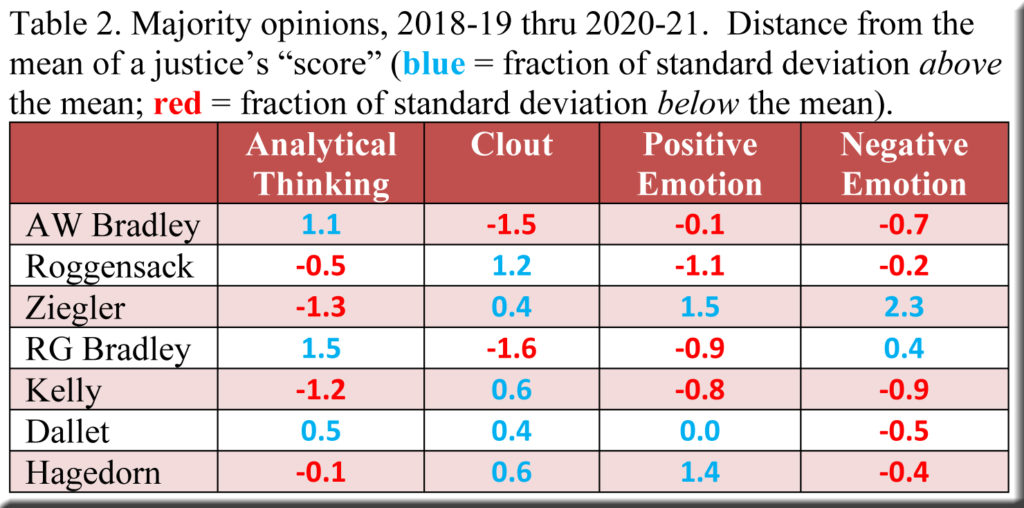

LIWC generates statistics of many other sorts (ranging from an author’s choice of punctuation marks to use of informal language), and two of these categories are of particular interest here. Labeled “Positive Emotion” and “Negative Emotion,” they report the words associated with positive or negative emotions as a percentage of all the words an author uses. Converting these findings to our standard-deviation format and adding them to Table 1 yields Table 2.
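To make the idea of a category percentage concrete, here is a simplified sketch of dictionary-based word counting in Python. It is not LIWC’s implementation: the tiny word lists are hypothetical stand-ins for LIWC’s proprietary dictionaries, the tokenizer is a plain regular expression, and the rule that an entry ending in `*` matches any word beginning with that stem is an assumption made for illustration. The names `POSITIVE`, `NEGATIVE`, `in_category`, and `emotion_percentages` are likewise hypothetical.

```python
import re

# Hypothetical mini-dictionaries for illustration only; LIWC's actual word lists
# are proprietary and far larger. An entry ending in "*" is treated as a stem.
POSITIVE = {"benefit*", "fair", "good", "proper*"}
NEGATIVE = {"harm*", "unfair", "violat*", "wrong*"}


def in_category(word: str, entries: set[str]) -> bool:
    """True if word equals an entry, or begins with an entry's stem (entries ending in '*')."""
    return any(
        word.startswith(entry[:-1]) if entry.endswith("*") else word == entry
        for entry in entries
    )


def emotion_percentages(text: str) -> dict[str, float]:
    """Share of all words that fall in each category, expressed as a percentage."""
    words = re.findall(r"[a-z']+", text.lower())  # crude tokenizer: runs of letters/apostrophes
    if not words:
        return {"positive_emotion": 0.0, "negative_emotion": 0.0}
    pos = sum(in_category(w, POSITIVE) for w in words)
    neg = sum(in_category(w, NEGATIVE) for w in words)
    return {
        "positive_emotion": 100 * pos / len(words),
        "negative_emotion": 100 * neg / len(words),
    }


print(emotion_percentages("A fair reading, but the other is wrong and harmful."))
# {'positive_emotion': 10.0, 'negative_emotion': 20.0}
```

In the ten-word sample sentence, "fair" counts as positive while "wrong" and "harmful" count as negative, giving 10% and 20%. Percentages of this kind, computed per justice, are what the standard-deviation conversion then compares across the court.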

For both “emotion” categories, Justice Ziegler’s results catch the eye—fully 2.3 standard deviations above the mean for frequency of “Negative Emotion” words, but also 1½ standard deviations above the mean for “Positive Emotion” words. In contrast, when the table is viewed across all four columns, Justice Dallet’s scores are much closer to the mean than are those of her colleagues.

Concurrences

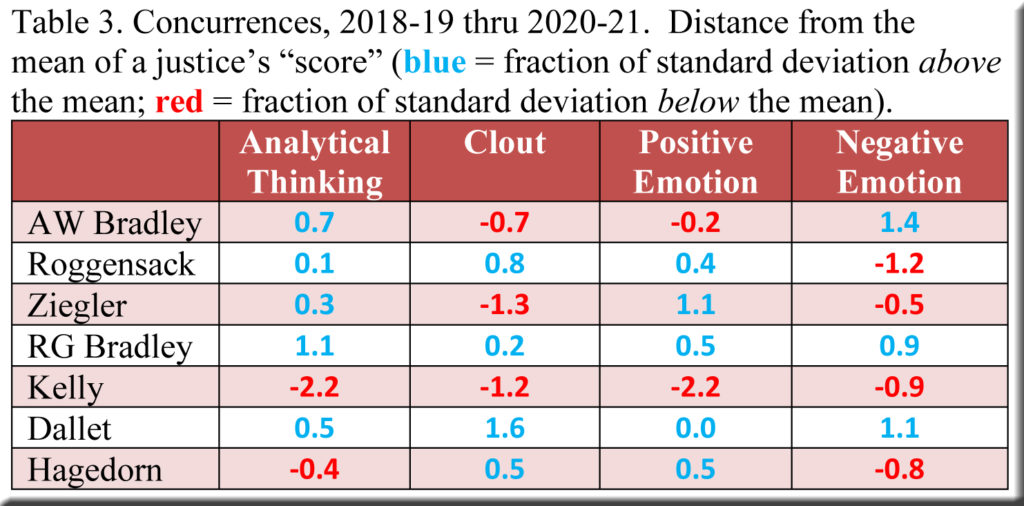

Majority opinions provide LIWC with the largest writing sample to inspect, but the justices also wrote concurrences and dissents that we can run through the software. For concurrences, LIWC’s results (after our standard-deviation processing) appear in Table 3, where Justice Kelly registered the most pronounced departures from the mean: more than two standard deviations below it in both “Analytical Thinking” and “Positive Emotion.” In contrast, Justices Rebecca Bradley and Dallet were at or above the mean in all four categories. Also noteworthy, LIWC scored Justice Roggensack as the least frequent user of “Negative Emotion” words in her concurrences (1.2 standard deviations below the mean), while placing her at or above the mean in the first three columns.

Dissents

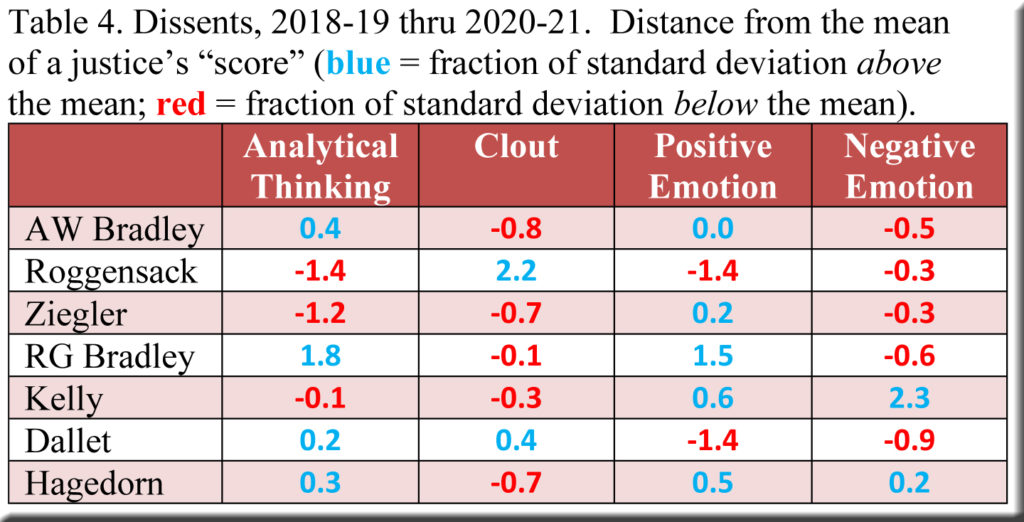

As for dissents, Table 4 shows Justices Ann Walsh Bradley and Hagedorn closest to the mean overall, with Justice Kelly poised to join them, until our gaze reaches the “Negative Emotion” category, where his dissents posted a score 2⅓ standard deviations above the mean.

Views will differ over the desirability of negative or positive emotion in a justice’s opinions. Much depends on an observer’s outlook as to whether a justice’s fervent language should be characterized as principled remonstrance or politicized truculence. LIWC does not possess the technical sophistication to address such questions, but it can suggest avenues to explore for those embarking on a deeper analysis of a justice’s writings.

[1] See, for example, Kristopher Velasco, “Do National Service Programs Improve Subjective Well-Being in Communities?,” The American Review of Public Administration, January 2019; Shane A. Gleason, “The Role of Gender Norms in Judicial Decision-Making at the U.S. Supreme Court: The Case of Male and Female Justices,” American Politics Research, January 2019; Spyros Kosmidis, “Party Competition and Emotive Rhetoric,” Comparative Political Studies, January 2019; Mingming Liu et al., “Using Social Media to Explore the Consequences of Domestic Violence on Mental Health,” Journal of Interpersonal Violence, February 2021.

[2] One post considered majority opinions, while the other focused on dissents.

[3] Justices Abrahamson and Karofsky are excluded because each served on the court for only one of the three terms under consideration. Justice Kelly, who served for two of the three terms, is included.

[4] Service Employees International Union (SEIU), Local 1 v. Robin Vos and Nancy Bartlett v. Tony Evers, two severely fragmented decisions, are not included. Co-authored dissents and concurrences (very rare) are also excluded.

[5] James W. Pennebaker et al., Linguistic Inquiry and Word Count: LIWC2015 (Austin, TX: Pennebaker Conglomerates, 2015). The pages are unnumbered; see the second page from the end.

Naturally, these algorithms are not “perfect,” as noted in the initial 2019 post, but they are the product of extensive testing and have been found useful by researchers for several years. For more on the capabilities and limitations of LIWC, see (in addition to the articles cited above): Frank B. Cross and James W. Pennebaker, “The Language of the Roberts Court,” Michigan State Law Review (2014); Paul M. Collins, Jr., Pamela C. Corley, and Jesse Hamner, “The Influence of Amicus Curiae Briefs on U.S. Supreme Court Opinion Content,” Law & Society Review, vol. 49 (December 2015); and Bryan Myers et al., “The Heterogeneity of Victim Impact Statements: A Content Analysis of Capital Trial Sentencing Penalty Phase Transcripts,” Psychology, Public Policy and Law, vol. 24 (2018). Pamela C. Corley and Justin Wedeking, in “The (Dis)Advantage of Certainty: The Importance of Certainty in Language,” Law & Society Review, vol. 48 (March 2014), address the issue of quotations included within opinions.

[6] For each justice, I collected all of his or her majority opinions and removed the introductory pages, any appendices, and any separate opinions by colleagues before running the text through LIWC.
